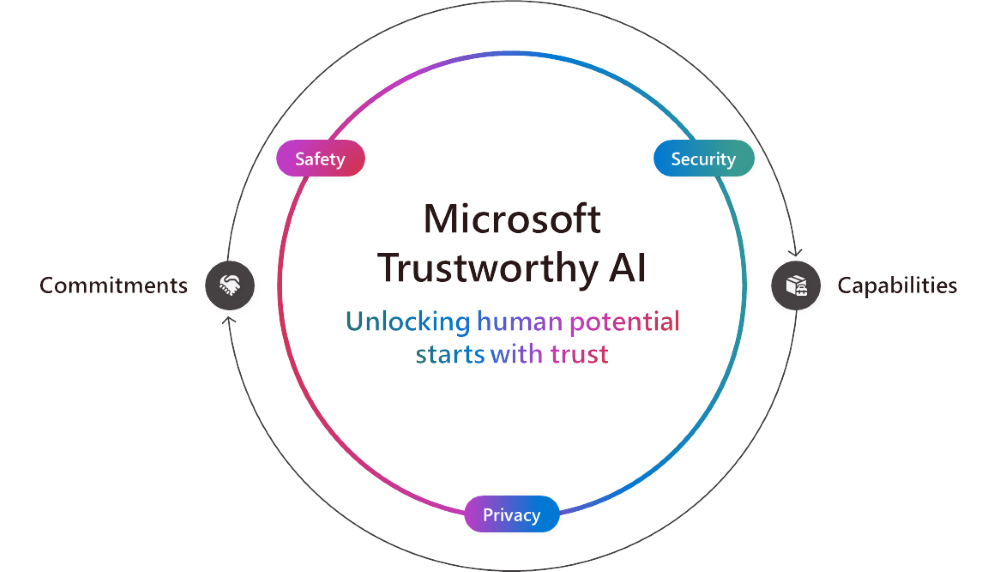

As AI continues to advance, everyone has a role in ensuring that its positive potential is fully realized for organizations and communities worldwide. At Microsoft, we are committed to helping customers build and use AI that is trustworthy—AI that is secure, safe, and private.

Our approach to Trustworthy AI combines strong commitments and cutting-edge technology to protect our customers and developers at every level. Today, we are proud to introduce new capabilities that strengthen the security, safety, and privacy of AI systems.

Security: A Top Priority

Security remains the foundation of our AI strategy at Microsoft. Our expanded Secure Future Initiative (SFI) emphasizes our company-wide responsibility to make our customers more secure. This week, we released our first SFI Progress Report, which highlights updates across culture, governance, technology, and operations, all guided by three key principles: secure by design, secure by default, and secure operations.

In addition to our core offerings like Microsoft Defender and Purview, we provide robust security features within our AI services, including built-in protections to prevent prompt injections and copyright violations. Today, we’re unveiling two new security capabilities:

Evaluations in Azure AI Studio for proactive risk assessments.

Microsoft 365 Copilot transparency features, coming soon, to give admins and users better visibility into how web searches enhance Copilot responses.

Our customers are already benefiting from these capabilities. Cummins, a global leader in engine manufacturing, has turned to Microsoft Purview to enhance its data security and governance through automated data classification and tagging. EPAM Systems, a major player in software engineering, deployed Microsoft 365 Copilot for 300 users, confident in the data protection provided by Microsoft Purview. J.T. Sodano, Senior Director of IT at EPAM, noted, “We were a lot more confident with Copilot for Microsoft 365 compared to other large language models because we know that our data protection policies in Microsoft Purview apply to Copilot.”

Safety: Building AI Responsibly

Since 2018, Microsoft has adhered to its Responsible AI principles, which guide how we build and deploy AI safely across the company. In practice, this means rigorously testing and monitoring systems to prevent harmful content, bias, and other unintended consequences. Over the years, we’ve made substantial investments in the governance, tools, and processes needed to uphold these principles, and we share what we have learned with our customers to help them build safe AI applications.

Today, we are announcing new tools to help organizations harness AI’s benefits while minimizing risks:

Correction Capability in Microsoft Azure AI Content Safety to fix hallucination issues in real time before users encounter them.

Embedded Content Safety for on-device scenarios, ensuring safe AI use even when cloud connectivity is intermittent.

New Evaluations in Azure AI Studio to assess the quality and relevancy of AI outputs and detect protected material.

Protected Material Detection for Code, now in preview, to help developers explore public source code while ensuring responsible usage.

Customers like Unity, a leading platform for 3D game development, use Microsoft Azure OpenAI Service to build Muse Chat, an AI assistant that makes game creation easier and safer. Similarly, UK-based retailer ASOS uses Azure AI Content Safety in its AI-driven apps to filter inappropriate content and improve customer interactions.

In education, New York City Public Schools partnered with Microsoft to pilot a safe AI chat system, and the South Australia Department for Education brought generative AI into classrooms with EdChat, built on the same secure infrastructure.

Privacy: Ensuring Data Protection and Compliance

Data is the cornerstone of AI, and protecting customer data is paramount. Microsoft’s long-standing privacy principles—control, transparency, and regulatory compliance—guide our efforts. Today, we’re expanding our privacy capabilities with:

Confidential Inferencing for the Whisper model in Azure OpenAI Service, ensuring end-to-end privacy during the inferencing process. This is crucial for regulated industries like healthcare, finance, and manufacturing.

General Availability of Azure Confidential VMs with NVIDIA H100 Tensor Core GPUs, providing encrypted data protection directly on the GPU. This supports secure AI development in highly regulated environments.

Azure OpenAI Data Zones for the EU and U.S., offering customers more control over data processing and storage within their respective regions.

Organizations like F5, a leader in application security, use Azure Confidential VMs with NVIDIA GPUs to build secure AI-powered solutions. Meanwhile, the Royal Bank of Canada (RBC) has integrated Azure confidential computing to analyze encrypted data while preserving customer privacy.

Achieve More with Trustworthy AI

We all need and expect AI we can trust. Microsoft is committed to empowering customers to unlock the potential of AI securely, safely, and privately. From reshaping business processes to reimagining customer engagement, the impact of trustworthy AI is far-reaching.

Our new capabilities in security, safety, and privacy enable organizations to harness the power of AI while maintaining trust at every level. Trustworthy AI is integral to Microsoft’s mission as we strive to expand opportunity, protect fundamental rights, and foster sustainability across all our efforts. By building AI solutions that reflect these values, we help every person and organization on the planet achieve more.